前言

本文用于记录古珊用于处理的相关代码。

2023-05-17_转换NEON-NCAR的hdf5格式GPP数据为csv

# -*- coding: utf-8 -*-

import h5py

import csv

hdf5file = 'D:/PythonLib/NEON.D08.LENO.DP4.00200.001.nsae.2017-08-01.expanded.20221207T142827Z.h5'

output_file = 'nsae.csv'

filename = hdf5file

with h5py.File(filename, 'r') as f:

leno_grp = f['LENO']

dp04_grp = leno_grp['dp04']

data_grp = dp04_grp['data']

fluxCo2_grp = data_grp['fluxCo2']

if 'nsae' in fluxCo2_grp:

nsae_dataset = fluxCo2_grp['stor']

print("nsae 变量的值:")

print(nsae_dataset[:])

# 将数据写入CSV文件

with open(output_file, 'w', newline='') as csvfile:

writer = csv.writer(csvfile)

# 写入表头

writer.writerow(['nsae'])

# 写入数据

writer.writerows(nsae_dataset)

print("数据已成功写入CSV文件:", output_file)

else:

print("未找到 nsae 变量")

2023-05-23_转换NEON-NCAR的netcdf格式GPP文件数据为csv

import os

import pandas as pd

from netCDF4 import Dataset, num2date

# 定义输入和输出文件路径

input_path = 'D:/CMAQ/TALL/' # 替换为你的输入文件路径

output_file = 'D:/CMAQ/TALL/TALL.csv' # 替换为你的输出CSV文件路径

# 定义变量名称和时间范围

variable_name = 'GPP'

start_date = '2018-01-01'

end_date = '2022-04-30'

# 创建空的DataFrame来保存数据

data = pd.DataFrame(columns=['Time', variable_name])

# 遍历每个输入文件

for year in range(2018, 2023):

if year == 2022:

max_month = 5 # 2022年只到4月份

else:

max_month = 13 # 其他年份遍历到12月份

for month in range(1, max_month):

file_name = f'TALL_eval_{year:04d}-{month:02d}.nc'

file_path = os.path.join(input_path, file_name)

# 使用os.path.join正确连接路径和文件名

# 检查文件是否存在

if not os.path.isfile(file_path):

continue

# 打开NetCDF文件

nc = Dataset(file_path)

# 读取时间变量和GPP变量

time_var = nc.variables['time']

gpp_var = nc.variables['GPP'][:,0,0]

# 将时间变量转换为日期时间对象

times = num2date(time_var[:], time_var.units, time_var.calendar)

# 筛选在时间范围内的数据

mask = (times >= pd.to_datetime(start_date)) & (times <= pd.to_datetime(end_date))

selected_times = times[mask]

selected_gpp = gpp_var[mask]

# 将数据添加到DataFrame中

df = pd.DataFrame({variable_name: selected_gpp}, index=selected_times)

data = data.append(df)

# 关闭NetCDF文件

nc.close()

# 将DataFrame保存为CSV文件

data.to_csv(output_file)

2023-06-17-转换AERONET的站点观测AOD数据为csv

注意下载的AERONET文件没有后缀名,因此直接用notepad++打开然后删除前面几行的文件说明使得文件的第一行即为标题行,然后进行如下操作

import csv

from datetime import datetime

def convert_date(date_string):

date_object = datetime.strptime(date_string, "%d:%m:%Y")

return date_object.strftime("%Y/%m/%d")

input_file = 'D:/20170101_20231231_NEON_LENO.lev10'

output_file = 'D:/20170101_20231231_NEON_LENO.lev10.csv'

# 读取逗号分隔的文件并写入转换后的数据到新文件

with open(input_file, 'r') as file_in, open(output_file, 'w', newline='') as file_out:

reader = csv.reader(file_in)

writer = csv.writer(file_out)

header = next(reader) # 读取并保留标题行

header.insert(0, 'converted_date') # 在标题行中插入新列的标题

writer.writerow(header) # 写入标题行到新文件

for row in reader:

original_date = row[0]

converted_date = convert_date(original_date)

row.insert(0, converted_date) # 在每一行的开头插入转换后的日期

writer.writerow(row) # 写入转换后的行数据到新文件

运行以上代码后即可生成csv文件并完成第1列的日期格式转换.接下来打开生成的csv文件将converted_date列的列标题替换为Date(dd:mm:yyyy)并删除第2列

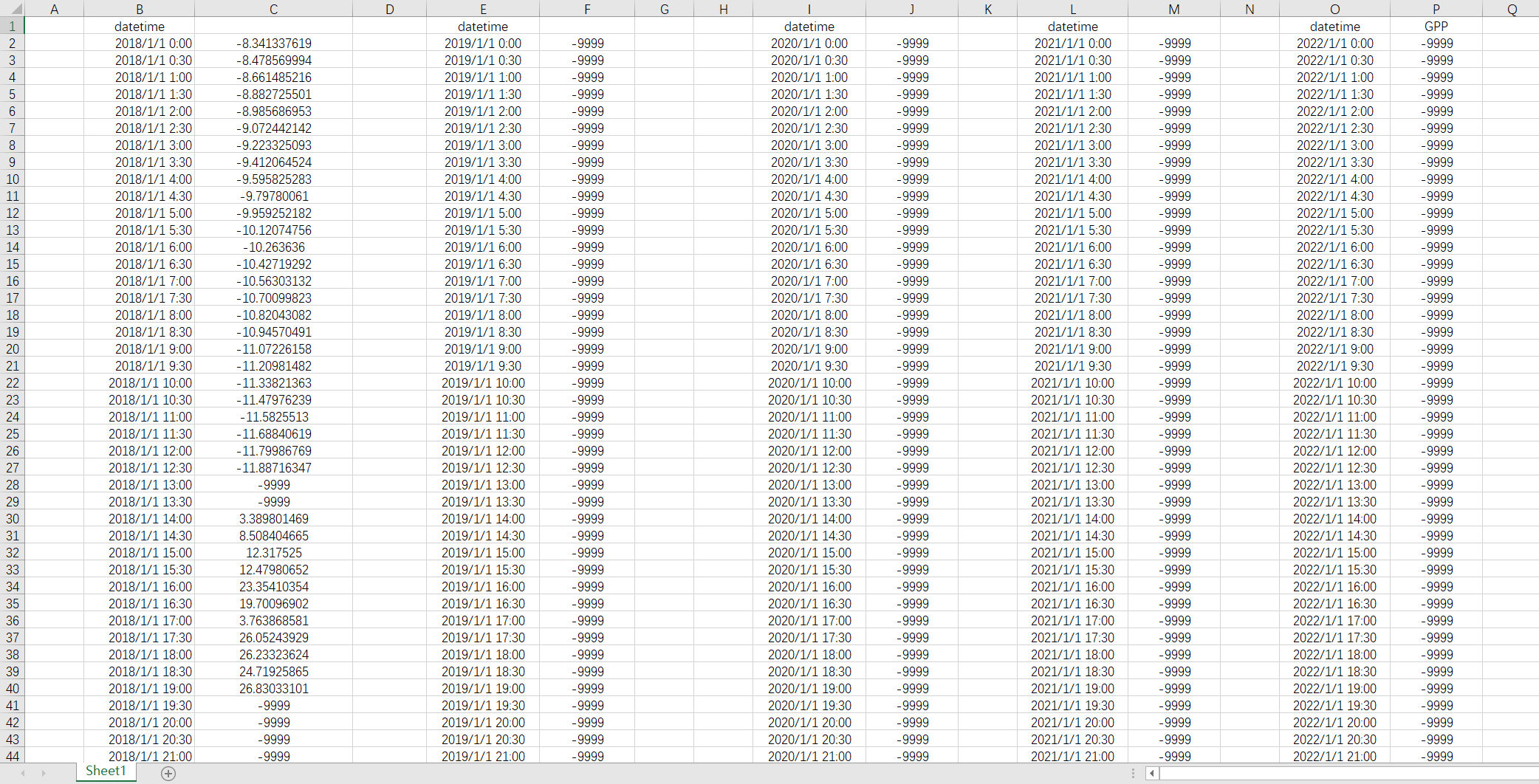

接下来修改2018-2022_LENO_GPP.xlsx文件中的行标题使其GPP列仅标注于最后一列,如下图所示

接下来执行以下python程序以计算每半个小时内AERONET观测到的AOD均值并输出到新文件中

import pandas as pd

import numpy as np

from pandas.tseries.frequencies import to_offset

# 读取数据1 - 站点4

table7 = pd.read_excel('./2018-2022_LENO_GPP.xlsx')

table8 = pd.read_csv('./20170101_20231231_NEON_LENO.lev10.csv')

# 站点4

table7_sub1 = table7.iloc[:,1:3].loc[lambda x : x['datetime'].notnull()].rename(columns={'Unnamed: 2':'GPP'})

table7_sub2 = table7.iloc[:,4:6].loc[lambda x : x['datetime.1'].notnull()].rename(columns={'datetime.1':'datetime','Unnamed: 5':'GPP'})

table7_sub3 = table7.iloc[:,8:10].loc[lambda x : x['datetime.2'].notnull()].rename(columns={'datetime.2':'datetime','Unnamed: 9':'GPP'})

table7_sub4 = table7.iloc[:,11:13].loc[lambda x : x['datetime.3'].notnull()].rename(columns={'datetime.3':'datetime','Unnamed: 12':'GPP'})

table7_sub5 = table7.iloc[:,14:16].loc[lambda x : x['datetime.4'].notnull()].rename(columns={'datetime.4':'datetime'})

left4 = pd.concat([table7_sub1,table7_sub2,table7_sub3,table7_sub4,table7_sub5],ignore_index=True)

# 去除left4中datetime的毫秒

left4['datetime'] = pd.to_datetime(left4['datetime'].apply(lambda x : x.strftime('%Y-%m-%d %H:%M:%S')))

right4 = (

table8

.assign(dt = lambda x : x.loc[:,'Date(dd:mm:yyyy)'].str.cat(x.loc[:,'Time(hh:mm:ss)'],sep=' '))

# 2017/1/10 18:57:48

.assign(dt = lambda x : pd.to_datetime(x.loc[:,'dt'],format='%Y/%m/%d %H:%M:%S'))

.assign(dt = lambda x : x.dt.apply(lambda x : x.strftime('%Y-%m-%d %H:%M:%S')))

.assign(dt = lambda x : pd.to_datetime(x.loc[:,'dt']))

.resample('30min',on='dt',loffset='15min')

.agg({'Date(dd:mm:yyyy)': 'count', 'AOD_500nm': 'mean'})

)

right4.index = right4.index + to_offset('15min')

result4 = (

pd.merge(left4,right4,left_on='datetime',right_index=True,how='left')

.rename(columns={'Date(dd:mm:yyyy)':'count','AOD_500nm':'AOD_500nm_mean'})

)

result4.astype({'count':'Int64'}).to_csv('./LENO-AERONET-AOD.csv',index=False)

完成以上步骤即可得到最终输出的站点的AERONET-AOD与GPP数据文件.

2023-06-17-将UTC时间转换为阿拉巴马州的当地时间

import csv

from datetime import datetime, timedelta

import pytz

# 将UTC时间转换为阿拉巴马州的当地时间

def convert_to_local_time(utc_time):

utc = pytz.timezone('UTC')

local_tz = pytz.timezone('US/Central') # 阿拉巴马州的时区

utc_dt = datetime.strptime(utc_time, '%Y/%m/%d %H:%M')

utc_dt = utc_dt.replace(tzinfo=utc)

local_dt = utc_dt.astimezone(local_tz)

return local_dt.strftime('%Y/%m/%d %H:%M')

# 读取CSV文件

with open('merged.csv', 'r') as input_file, open('final-merged-PAR.csv', 'w', newline='') as output_file:

reader = csv.DictReader(input_file)

fieldnames = reader.fieldnames + ['localtime']

writer = csv.DictWriter(output_file, fieldnames=fieldnames)

writer.writeheader()

for row in reader:

utc_time = row['endDateTime'] # 假设CSV文件中的时间列名为'datetime'

local_time = convert_to_local_time(utc_time)

row['localtime'] = local_time

writer.writerow(row)

print("done")

2023-06-17-针对AmeriFlux文件中的时间列进行处理转换

import csv

from datetime import datetime

input_file = './AMF_US-xTA_FLUXNET_FULLSET_HH_2017-2021_3-5.csv'

output_file = './AMF_US-xTA_FLUXNET_FULLSET_HH_2017-2021_3-5-date-converted.csv'

# 打开输入CSV文件

with open(input_file, 'r') as input_file:

reader = csv.DictReader(input_file)

# 读取标题行

header = reader.fieldnames

# 跳过标题行

next(reader)

# 创建输出CSV文件并写入标题行

with open(output_file, 'w', newline='') as output_file:

writer = csv.writer(output_file)

writer.writerow(header + ['Converted_Date'])

for row in reader:

# 获取原始日期字符串并删除空格

timestamp = row['TIMESTAMP_END'].replace(' ', '')

# 解析原始日期字符串为datetime对象

datetime_obj = datetime.strptime(timestamp, '%Y%m%d%H%M')

# 格式化datetime对象为目标日期字符串格式

formatted_date = datetime_obj.strftime('%Y/%m/%d %H:%M')

# 将转换后的日期写入输出文件的当前行

writer.writerow(list(row.values()) + [formatted_date])

#注意AmeriFlux文件里的时间已经是当地时间,并不是UTC时间(可以从GPP与气温的日变化直接推断出来)

完成以上操作后,即可将AERONET观测到的AOD均值列添加到AmeriFlux的文件中并另存为xlsx文件(因为csv文件只能有1个sheet,不便于后续操作.)

参考文献及链接

Python for Atmosphere and Ocean Scientists

https://carpentries-lab.github.io/python-aos-lesson/